The debate over whether agent-based or agent-less monitoring is “better” has been answered many times over the years in magazine and online articles, blog posts, vendor white papers and elsewhere. Unfortunately, most of these articles are incomplete, inaccurate, biased, or a combination thereof.

To make things slightly more confusing, different ISVs use different methods for monitoring servers and workstations. Some use agents, some don’t, and a small few offer both methods. But what is ultimately the best method?

What are you monitoring?

First, it’s important to determine what is being monitored before deciding whether an agent-based or agent-less approach is better. For example, collecting system metrics like performance data usually creates fewer challenges than transmitting large amounts of (event) log data. Furthermore, agent-based monitoring is not an option for devices which run a proprietary embedded OS (think switch, printer, …) where you can’t install an agent in the first place.

Consequently, I’ll be focusing on monitoring (event) logs with an emphasis on Microsoft Windows in this post. Having developed both agent-based and agent-less components in C++ over the years, I feel that I am in a good position to objectively compare the two approaches.

The Myths

Monitoring software is of course not the only type of software that uses agents; a lot of other enterprise software (backup, deployment, A/V …) uses agents as well. Below are some of the myths about what using (monitoring) agents entails:

- Agents may use up too many resources on the monitored hosts and slow down the monitored machines

- Agents can become unstable and negatively affect the host OS

- Deploying and managing agents is tedious and time-consuming

- Installing agents may require the installation & deployment of dependencies the agents need (.NET, Java, …)

- Installing third-party software will decrease the security of the monitored host

The Reality

It’s understandable that software which is installed on potentially every server and workstation in a network undergoes some level of scrutiny, but would you be surprised to learn that agents excel in the following areas:

| Area | Why agents excel |
| --- | --- |
| 1. Security | Better security since agents push data to a central component, instead of the monitored server being configured to allow remote collection. |
| 2. Reliability | Agents can temporarily store and cache monitored logs if connectivity to the central monitoring server is lost, even if local logs are no longer available. Agents can also take corrective actions more quickly because they can work in isolation (offline). Mobile devices cannot be monitored with agent-less solutions since they cannot be reached by the central monitoring component. |
| 3. Performance | Agents can apply local filtering rules and only transmit data which is valuable, thus increasing throughput while decreasing network utilization. |
| 4. Functionality | They offer more capabilities since there are essentially no limits as to what type of information can be gathered by an agent since it has full access to the monitored system. |
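
To make the performance point a bit more concrete, here is a minimal sketch of local filtering in Python. It is only an illustration, not how any particular agent is implemented: the `Event` class, the rule sets and the choice of event ID 4625 (the Windows failed-logon event) as an "always forward" example are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Simplified, hypothetical stand-in for an event log record."""
    source: str
    event_id: int
    level: str  # e.g. "Information", "Warning", "Error"
    message: str


# Hypothetical filter rules: forward warnings and errors, plus a short
# list of event IDs that should always be sent regardless of level.
FORWARD_LEVELS = {"Warning", "Error"}
ALWAYS_FORWARD_IDS = {4625}  # illustrative choice: failed logon


def should_forward(event: Event) -> bool:
    """Decide on the monitored host whether an event is worth transmitting."""
    return event.level in FORWARD_LEVELS or event.event_id in ALWAYS_FORWARD_IDS


def filter_events(events):
    """Return only the events that match the local rules, in original order."""
    return [e for e in events if should_forward(e)]
```

Everything that fails `should_forward` never leaves the host, which is where the savings in network utilization come from. An agent-less collector would typically have to pull the events first and discard them afterwards.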

The Easy Way Out

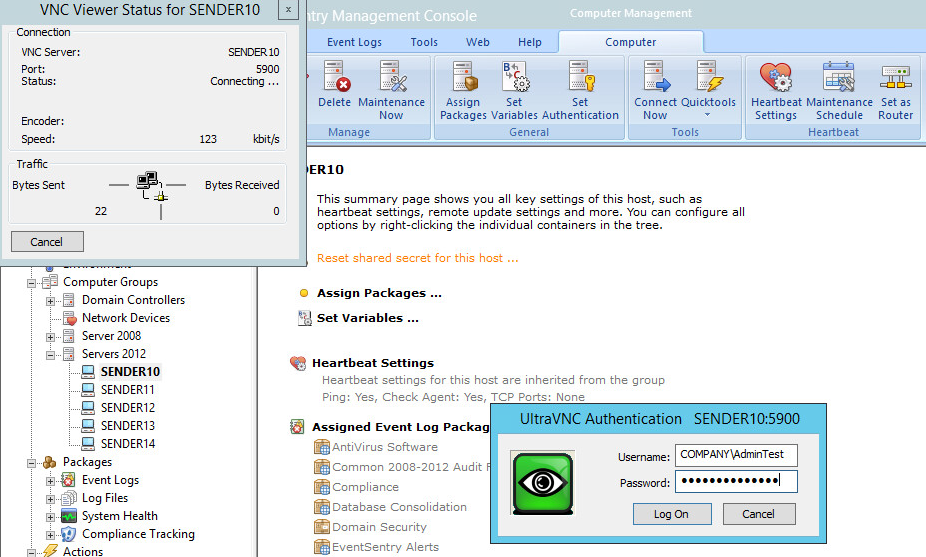

Developing agents along with an easy-to-use deployment mechanism requires a lot of time and resources, so it doesn’t come as a surprise that many vendors prefer to monitor hosts without agents. To compensate for the shortfall, ISVs which have to rely solely on an agent-less approach will do their best to:

- Emphasize that they do not use agents

- Persuade you that agent-less monitoring is preferable

The irony, when promoting a solution as agent-less, is that even so-called agent-less solutions do in fact utilize an agent – the only difference being that the agent is (usually) integrated into Windows. Windows doesn’t just magically service remote clients asking for a boatload of WMI data – it processes these requests through the WMI service, which, for all intents and purposes, is an agent. For example, accessing the Windows event logs via WMI traverses significantly more layers than accessing the event logs directly.

Conclusion

With the exception of network devices where an agent cannot be installed, agent-based solutions will provide a more thorough monitoring experience 9 out of 10 times – assuming that the agent meets all the checklist requirements below.

Some event log monitoring vendors will try to convince you that agent-less monitoring is better & easier (easier for whom?) – but don’t fall for it. We’ve been tweaking and improving the EventSentry agent for more than 10 years, and as a result EventSentry offers one of the most advanced and efficient Windows agents for log monitoring on the market. Developing a rock-solid, secure and fast agent is hard, but it’s the only sensible approach which doesn’t cut corners.

There are situations when deploying a full-scale monitoring solution with agents is not possible, for example when you are tasked with monitoring a third-party network where installing any software is not an option. While unfortunate, an agent-less monitoring solution can fill the gap in this case.

EventSentry also utilizes SNMP (agent-less) to gather inventory, performance metrics and other system data from non-Windows devices, including Linux hosts. This collection method does suffer from the above limitations, but since log data is pushed from non-Windows devices via the Syslog protocol, it’s an acceptable compromise.

Don’t compromise when it comes to monitoring the (event) logs of your Windows infrastructure: select an architecture which scales and offers security & performance.

Technical Comparison

The table below examines the difference between agent-based and agent-less solutions in greater detail.

| Agent-Based | Agent-Less | Verdict |
| --- | --- | --- |
|  |  | Higher network utilization combined with the fact that remote log collection will still utilize CPU cycles on the remote host (e.g. through WMI provider) favors agent-based solutions. Agent-less solutions have a single point of failure, while agents can filter & evaluate data locally before transmitting them to a central database. |

EventSentry Agents are designed to be essentially invisible under normal operations and do not impact the host system negatively in any way.

| Agent-Based | Agent-Less | Verdict |
| --- | --- | --- |
|  |  | Agent-based solutions have an advantage since local data can be cached and corrective actions can be executed even when the central monitoring component is unavailable. Cached data & logs are re-transmitted – even if the local logs have been cleared or overwritten. Agent-based solutions can monitor hosts even when disconnected from the LAN. |

The EventSentry Agent auto-recovers if the process aborts unexpectedly, and by default alerts the user when this occurs. When using the collector (default), the agent caches all data locally and retransmits when the network connection becomes available again.
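
The caching behavior described here is essentially a store-and-forward queue. The sketch below shows the idea in Python under a few assumptions: the `StoreAndForwardBuffer` class and the `send` callable are hypothetical names, the buffer lives in memory only (a real agent would persist it to disk so that it survives a restart), and it says nothing about how the EventSentry agent implements this internally.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Hypothetical in-memory cache that holds records until they are delivered."""

    def __init__(self, send, max_items=10000):
        # send(record) is assumed to raise ConnectionError when the
        # central component cannot be reached.
        self._send = send
        # With maxlen set, the oldest record is dropped once the cache is full.
        self._pending = deque(maxlen=max_items)

    def submit(self, record):
        """Cache the record first, then try to deliver everything pending."""
        self._pending.append(record)
        self.flush()

    def flush(self):
        """Deliver pending records in order; stop at the first failure."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break  # still offline, keep the remaining records cached
            self._pending.popleft()  # remove only after a successful send
            sent += 1
        return sent

    def pending_count(self):
        return len(self._pending)
```

Because a record is copied into the agent's own cache as soon as it is seen, it can still be delivered later even if the local log has been cleared or overwritten in the meantime.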

|

Agent-Based

|

Agent-Less

|

Verdict

|

|

|

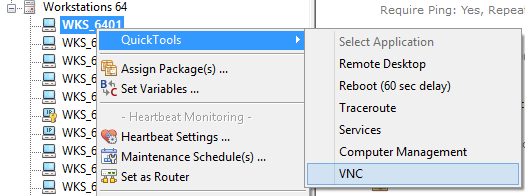

Depends on deployment tools made available by vendor as well as management tools in place for configuring Windows settings. A poorly developed deployment tool would favor an agent-less solution.

|

|

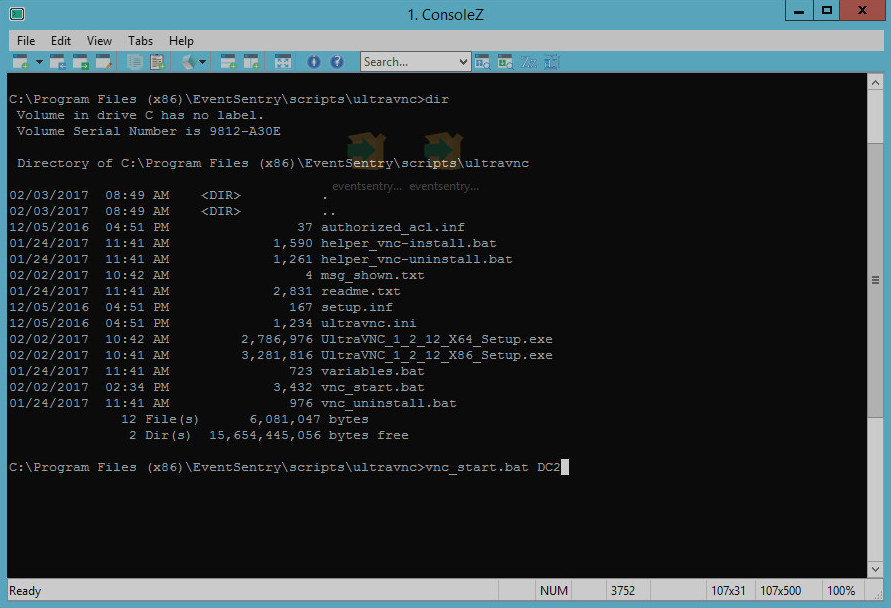

EventSentry Agents can be deployed (multi-threaded) with the management console or through 3rd party deployment software by creating a MSI installer on the fly. When using the collector (default), agent updates (patches) can be deployed automatically.

|

||

| Agent-Based | Agent-Less | Verdict |
| --- | --- | --- |
|  |  | Depends on whether the agent has dependencies and whether configuration changes need to be made on the monitored hosts. |

The EventSentry Agent does not depend on any 3rd party frameworks or libraries.

| Agent-Based | Agent-Less | Verdict |
| --- | --- | --- |
|  |  | Since agent-based solutions do not require permanent remote access and monitored hosts can therefore be hardened more, they are inherently more secure IF the agent doesn’t suffer from an insecure design and/or vulnerabilities. Agent-based solutions also have more control over how data is transmitted from the remote hosts. If there is general concern against third-party software then the product in question should be researched in a vulnerability database like http://www.cvedetails.com. |

The EventSentry Agent does not open any ports on a monitored host and resides in a secured location on disk. The agent transmits compressed data securely via TLS to the collector. No major security vulnerabilities have been discovered in the EventSentry agent since its first release in 2002.
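
The push model is what allows the monitored host to keep all inbound ports closed: the agent opens an outbound connection, and nothing on the host has to listen. Below is a rough Python sketch of that pattern using only the standard library. It is not the EventSentry wire protocol; the function names, the JSON encoding and the 4-byte length prefix are assumptions chosen to keep the example short.

```python
import json
import socket
import ssl
import zlib


def build_payload(events):
    """Serialize a list of event dicts to compact JSON and compress it."""
    raw = json.dumps(events, separators=(",", ":")).encode("utf-8")
    return zlib.compress(raw)


def decode_payload(payload):
    """Inverse of build_payload, as a receiving collector might apply it."""
    return json.loads(zlib.decompress(payload).decode("utf-8"))


def push_to_collector(payload, host, port, timeout=10.0):
    """Open an outbound TLS connection and send one length-prefixed payload.

    The agent side only connects out; it never binds or listens on a port.
    """
    # The default context verifies the server certificate and host name.
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            # Hypothetical framing: 4-byte big-endian length, then the data.
            tls.sendall(len(payload).to_bytes(4, "big") + payload)
```

With this design the firewall on a monitored host only needs to allow outbound traffic to the collector, whereas remote collection requires the host to accept inbound connections on a permanent basis.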

| Agent-Based | Agent-Less | Verdict |
| --- | --- | --- |
|  |  | Agent-based solutions have an advantage since they can utilize multiple technologies to obtain data, including highly efficient direct API access. Agents can also trigger (corrective) actions locally even while the agent is unreachable. Agent-less solutions can only monitor data which is made available by the remote protocol. |

The EventSentry Agent accesses log files, event logs and other system health data almost exclusively via direct API calls. The more resource-intensive WMI interface is only used minimally, for very specific purposes. Corrective action can be taken directly on the monitored host, often only in milliseconds after an error condition (event) has occurred.

Appendix: Checklist

When evaluating software that offers agents, you can use the checklist below.

| Requirement | Description |
| --- | --- |
| Resource Utilization | An agent needs to consume as few resources as possible under normal operations. With the exception of short (and unusual) peak periods, a user should never know that an agent is running on their server or workstation – period. The thought of having a resource-hogging agent running on a server sends shivers down the backs of many SysAdmins, and the agents used by certain AntiVirus vendors that rhyme with Taffy didn’t set a good precedent. |
| Stability & Reliability | The agent needs to run at all times without crashing – the SysAdmin needs to be able to go to sleep knowing that his agents will reliably monitor all servers and workstations. Unstable agents are just no fun, especially when they negatively impact the host OS. If an agent encounters an issue, it needs to at least auto-recover and communicate the issue to the admin. |
| Deployment | Agent deployment and management needs to be streamlined and easy – it shouldn’t be a burden on the end user. And while agent deployment is important, agent management (keeping the remote agent up-to-date) is equally important and should – ideally – be handled automatically. Most SysAdmins have enough work as it is; the last thing they need is to baby-sit the agents of their monitoring solution. |
| Dependencies | The more dependencies an agent has, the more difficult it is to deploy the agent. Agents that rely on complex frameworks like .NET, Java or specific Visual Studio runtimes are difficult and time-consuming to deploy. Furthermore, any third-party software that is installed as a dependency creates an additional attack vector and then needs to be kept up-to-date. |
| Security | An agent needs to be 100% secure and cannot expose the monitored host to any additional security risks. As the security comparison above explains, using agents is actually more secure than not using an agent – even though this seems counter-intuitive at first glance. |
